You are on a Zoom call when suddenly the audio lags behind the video. Your colleague's lips move, but it looks like a dubbed movie. On a call, this is a minor inconvenience. For scientific experiments that use virtual reality (VR), however, it can be detrimental.

For example, if you are a researcher employing VR to reproduce the cognitive benefits of exercise for bedbound patients, lag in visual and audio stimuli can sabotage your data and results.

Thus, as VR's use in human behavioral studies increases, the need for visual and auditory stimuli to be presented with millisecond accuracy and precision grows.

A research team from Tohoku University has measured the accuracy and precision of visual and auditory stimulus presentation in modern VR head-mounted displays (HMDs) controlled with the programming language Python.

Details of their research were published in the journal Behavior Research Methods on August 3, 2021.

"Most standard methods in laboratory studies are not optimized for VR environments," said paper co-author Ryo Tachibana. "Rather than a specialized software that enables greater experimental control, most VR studies employ Unity or Unreal Engine, which are 3D game engines."

Establishing better-suited VR environments, where researchers possess the flexibility to control and adjust them according to their experiments, would lead to more reliable results.

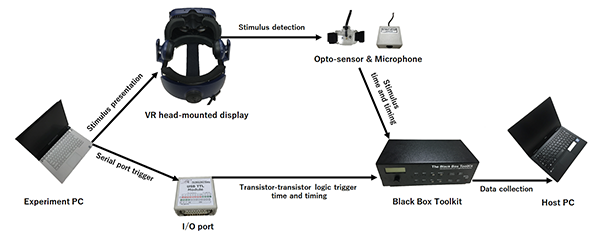

Tachibana and Kazumichi Matsumiya harnessed the latest Python tools for VR experiments and a special measuring device for stimulus timing known as a Black Box Tool Kit.

They recorded a time lag of 18 milliseconds (ms) for visual stimuli in modern VR HMDs. For auditory stimuli, the time lag was between 40 and 60 ms, depending on the HMD. Jitter, the standard deviation of the time lag, was 1 ms for visual stimuli and 4 ms for auditory stimuli. All results were consistent across Python 2 and Python 3 environments.
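To make the two measures concrete, here is a minimal Python sketch of how a mean time lag (accuracy) and jitter (precision) could be computed from paired onset times. It is not the authors' analysis code. The function name `timing_stats` and the sample values are hypothetical, and the sketch assumes jitter is taken as the sample standard deviation of the per-trial lags.

```python
from statistics import mean, stdev


def timing_stats(requested_ms, measured_ms):
    """Return (mean lag, jitter) in ms for paired stimulus onset times.

    requested_ms: times at which the program asked for the stimulus.
    measured_ms:  times at which an external device detected the stimulus.
    """
    if len(requested_ms) != len(measured_ms):
        raise ValueError("requested and measured lists must have equal length")
    # Per-trial lag: how late the stimulus physically appeared.
    lags = [m - r for r, m in zip(requested_ms, measured_ms)]
    # Accuracy is summarized by the mean lag; precision (jitter) is
    # assumed here to be the sample standard deviation of the lags.
    return mean(lags), stdev(lags)


# Made-up illustrative values, not data from the study.
requested = [0.0, 1000.0, 2000.0, 3000.0, 4000.0]
measured = [18.0, 1017.0, 2019.0, 3018.0, 4018.0]

lag, jitter = timing_stats(requested, measured)
print(f"mean lag: {lag:.1f} ms, jitter: {jitter:.2f} ms")
```

A constant lag can often be corrected for after the fact, whereas jitter varies from trial to trial and cannot be subtracted out, which is why the two are reported separately.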

Tachibana points out that, to date, there has been almost no empirical data evaluating the accuracy and precision of VR environments, despite their growing adoption in behavior research.

"We believe our study benefits researchers and developers who apply VR technology as well as studies on rehabilitation tools that require high timing accuracy for recording biological data," added Tachibana.

Publication Details:

Title: Accuracy and precision of visual and auditory stimulus presentation in virtual reality in Python 2 and 3 environments for human behavior research.

Authors: Ryo Tachibana & Kazumichi Matsumiya

Journal: Behavior Research Methods

DOI: 10.3758/s13428-021-01663-w

Contact:

Ryo Tachibana

Department of Human Information Sciences, Graduate School of Information Sciences

Email: ryo.tachibana

tohoku.ac.jp

Website: http://www.cp.is.tohoku.ac.jp/~eng/index.html